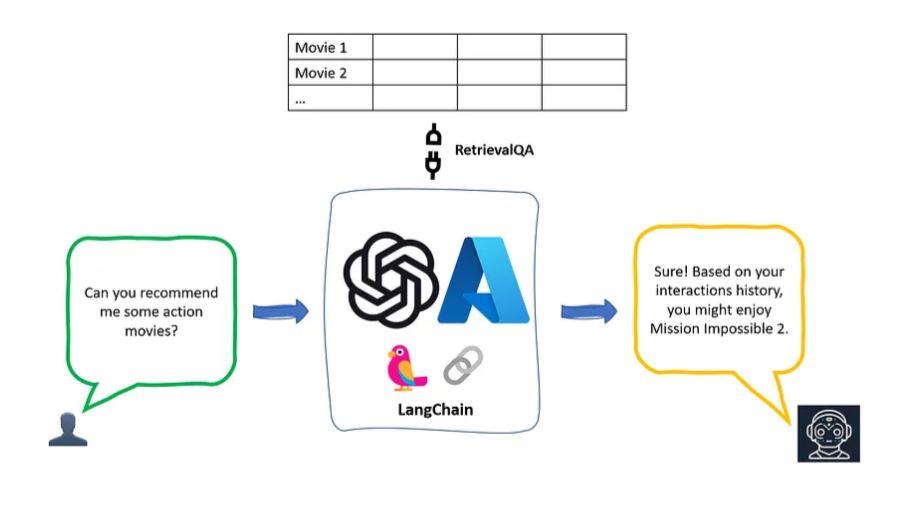

Recommendation systems have recently improved by using large language models (LLMs) to provide more detailed and personalized suggestions. However, integrating LLMs’ general knowledge and reasoning skills into these systems remains difficult. This paper introduces RecSysLLM, a recommendation model built on LLMs. RecSysLLM retains the LLM’s reasoning ability and knowledge while adding recommendation-specific knowledge.

This is achieved through dedicated methods for handling data, training the model, and running inference. As a result, RecSysLLM can apply LLMs’ abilities to item recommendation within a single, efficient system. Tests on standard benchmarks and in real-world scenarios show that RecSysLLM works well, making it a promising approach for building recommendation systems that make full use of pre-trained language models.

Enhancing Recommender Systems with Large Language Models

Recommender Systems (RSs) are important tools for personalized suggestions in many areas, such as online shopping and streaming services. Recent advances in language processing have led to Large Language Models (LLMs), which are very good at understanding and generating human-like text. RSs are effective and efficient in well-defined domains, but they adapt poorly and cannot make suggestions for data they haven’t seen before.

LLMs, on the other hand, are context-aware and adapt well to unseen data. Combining the two yields a powerful tool for giving relevant, context-appropriate suggestions, even when little data is available. This proposal investigates how LLMs can be integrated into RSs. It introduces a new method called Retrieval-augmented Recommender Systems, which combines the strengths of retrieval-based and generation-based models to improve the ability of RSs to give relevant suggestions.
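To make the retrieve-then-generate idea concrete, here is a minimal sketch in plain Python. It is not the proposal's implementation: the item embeddings, the helper names (`retrieve`, `build_prompt`), and the prompt wording are all assumptions chosen for illustration. A retrieval stage shortlists items by cosine similarity, and the shortlist is then packed into a text prompt that a generative LLM could answer.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(user_vec, item_vecs, k=2):
    """Retrieval stage: return the k item names most similar to the user vector."""
    ranked = sorted(
        item_vecs,
        key=lambda name: cosine(user_vec, item_vecs[name]),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(history, candidates):
    """Generation stage: turn the shortlist into a prompt for an LLM."""
    lines = [
        "The user recently interacted with: " + ", ".join(history) + ".",
        "Candidate items: " + ", ".join(candidates) + ".",
        "Pick the best candidate for this user and explain why.",
    ]
    return "\n".join(lines)


# Toy, made-up embeddings (hypothetical values, for illustration only).
ITEMS = {
    "Alien": [0.9, 0.1, 0.0],
    "Blade Runner": [0.8, 0.2, 0.1],
    "Notting Hill": [0.0, 0.1, 0.9],
}
user = [1.0, 0.0, 0.1]

shortlist = retrieve(user, ITEMS, k=2)
prompt = build_prompt(["The Matrix"], shortlist)
print(prompt)
```

In a real system the prompt would be sent to an LLM, and the retrieval stage would typically use a trained recommender or a vector index rather than hand-written vectors.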

Enhancing LLMs to construct personalized reasoning graphs

Recommendation systems are designed to give users suggestions that are relevant to them. However, these systems often struggle to explain their decisions and understand the deeper connections between a user’s actions and profile. This paper introduces a new method that uses large language models (LLMs) to create personalized reasoning graphs. These graphs connect a user’s profile and behavior using cause-and-effect and logical connections, making it easier to understand their interests.

Our method, called LLM reasoning graphs (LLMRG), has four parts:

- Chained graph reasoning

- Divergent extension

- Self-verification and scoring

- Improving the knowledge base

The resulting reasoning graph is then encoded using graph neural networks. This encoding serves as extra input to improve traditional recommendation systems without needing more information about the user or the items.

Our method shows how LLMs can make recommendation systems more logical and easier to interpret through personalized reasoning graphs. LLMRG lets recommendations benefit from both traditional recommendation systems and LLM-generated reasoning graphs. We show that LLMRG improves base recommendation models on standard benchmarks and in real-world scenarios.
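To make the graph neural network step concrete, the sketch below shows one simple way a small reasoning graph could be reduced to a fixed-size vector and appended to a recommender's existing user representation. This is an illustrative stand-in, not LLMRG's actual architecture: it assumes mean-aggregation message passing followed by mean pooling, and the node names, feature values, and `encode_graph` helper are hypothetical.

```python
def encode_graph(node_feats, edges, rounds=1):
    """Mean-aggregation message passing, then mean pooling into one vector."""
    # Build an undirected adjacency list from the edge pairs.
    neighbors = {node: [] for node in node_feats}
    for src, dst in edges:
        neighbors[src].append(dst)
        neighbors[dst].append(src)

    feats = {node: list(vec) for node, vec in node_feats.items()}
    for _ in range(rounds):
        updated = {}
        for node, vec in feats.items():
            # Average a node's own features with those of its neighbors.
            group = [vec] + [feats[m] for m in neighbors[node]]
            updated[node] = [sum(col) / len(group) for col in zip(*group)]
        feats = updated

    # Pool all node vectors into a single graph-level vector.
    vectors = list(feats.values())
    return [sum(col) / len(vectors) for col in zip(*vectors)]


# Hypothetical reasoning graph: nodes are reasoning steps, edges are links.
nodes = {
    "likes sci-fi": [1.0, 0.0],
    "watched Alien": [0.0, 1.0],
    "wants suspense": [1.0, 1.0],
}
links = [("watched Alien", "likes sci-fi"), ("watched Alien", "wants suspense")]

graph_vec = encode_graph(nodes, links)

# The graph vector is appended to whatever the base recommender already uses.
base_user_vec = [0.2, 0.4]  # made-up embedding from a conventional model
augmented_input = base_user_vec + graph_vec
print(augmented_input)
```

A trained graph neural network would learn its aggregation weights; the fixed averaging here only illustrates how graph structure flows into an extra input vector.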

Tutorial on Large Language Models for Recommendation

Foundation Models such as Large Language Models (LLMs) have greatly advanced many fields of study. They are especially useful for recommender systems, where they help provide personalized suggestions. LLMs can handle different recommendation tasks, such as rating prediction, sequential recommendation, direct recommendation, and explanation generation. They do this by turning these tasks into language instructions.
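As a rough illustration of turning recommendation tasks into language instructions, the sketch below maps each task to a text template. The template wording, the `TEMPLATES` dictionary, and the `to_instruction` helper are assumptions made for this example; the tutorial itself does not prescribe them.

```python
# Hypothetical instruction templates, one per recommendation task.
TEMPLATES = {
    "rating_prediction": (
        "User history: {history}. "
        "On a scale of 1 to 5, how would this user rate {item}?"
    ),
    "sequential_recommendation": (
        "The user interacted with, in order: {history}. "
        "What should they interact with next?"
    ),
    "direct_recommendation": (
        "User history: {history}. "
        "Should we recommend {item} to this user? Answer yes or no."
    ),
    "explanation_generation": (
        "User history: {history}. Explain why {item} might suit this user."
    ),
}


def to_instruction(task, history, item=None):
    """Render one recommendation task as a natural-language instruction."""
    template = TEMPLATES[task]
    if "{item}" in template and item is None:
        raise ValueError(f"task '{task}' needs a target item")
    return template.format(history=", ".join(history), item=item)


print(to_instruction("sequential_recommendation", ["Alien", "Aliens"]))
print(to_instruction("rating_prediction", ["Alien", "Aliens"], item="Blade Runner"))
```

Because every task becomes plain text, a single language model can in principle serve all of them, with the task chosen by the instruction rather than by a separate model.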

LLMs are also great at understanding natural language. This means they can understand what users prefer, descriptions of items, and the context of information to give better and more relevant suggestions. This leads to users being more satisfied and engaged.

This tutorial will teach you about Foundation Models such as LLMs for recommendation. We’ll talk about how recommender systems have evolved from shallow models to deep models and then to large models. We’ll also discuss how LLMs enable generative recommendation, which differs from the traditional discriminative approach of scoring and ranking candidate items.

We’ll also show you how to build recommender systems that use LLMs. We’ll cover many aspects of these systems, including data preparation, model design, pre-training, fine-tuning and prompting, multi-modal and multi-task learning, and trustworthiness concerns such as fairness and transparency.

Evaluations and limitations

Pre-trained language models (PLMs) like BERT and GPT are good at understanding text and encode a lot of knowledge about the world, which makes them adapt well to different tasks. In this study, we propose using these powerful PLMs as recommendation systems. We use prompts to reformulate session-based recommendation as a multi-token cloze task (a fill-in-the-blank task).
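A minimal sketch of what such a cloze prompt could look like is shown below. The prompt wording and the `session_to_cloze` helper are assumptions for illustration, not the study's exact template. `[MASK]` is the mask token used by BERT-style models, and several masks are needed because an item title usually spans more than one token.

```python
def session_to_cloze(session, mask_token="[MASK]", n_mask=3):
    """Turn a viewing session into a fill-in-the-blank prompt.

    n_mask is the number of blank tokens reserved for the next item's title.
    """
    watched = ", ".join(session)
    blanks = " ".join([mask_token] * n_mask)
    return f"A user watched {watched}. Now the user may want to watch {blanks}."


prompt = session_to_cloze(["Alien", "Aliens"], n_mask=2)
print(prompt)
```

A masked language model would then be asked to fill the blanks, and candidate movie titles can be scored by how likely the model finds their tokens in those positions.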

We tested this method on a movie recommendation dataset in zero-shot and fine-tuned settings. In the zero-shot setting, no training data is available. We found that PLMs did much better than random recommendation. However, we also observed a strong linguistic bias when using PLMs as recommenders.

In the fine-tuned setting, some training data is available. Fine-tuning reduced the linguistic bias, but PLMs still didn’t do as well as traditional recommendation systems like GRU4Rec.

Our findings show that there are opportunities for using this new method, but challenges also need to be addressed.

Content Reference:

- https://arxiv.org/abs/2308.10837

- https://dl.acm.org/doi/abs/10.1145/3604915.3608889

- https://dl.acm.org/doi/abs/10.1145/3604915.3609494