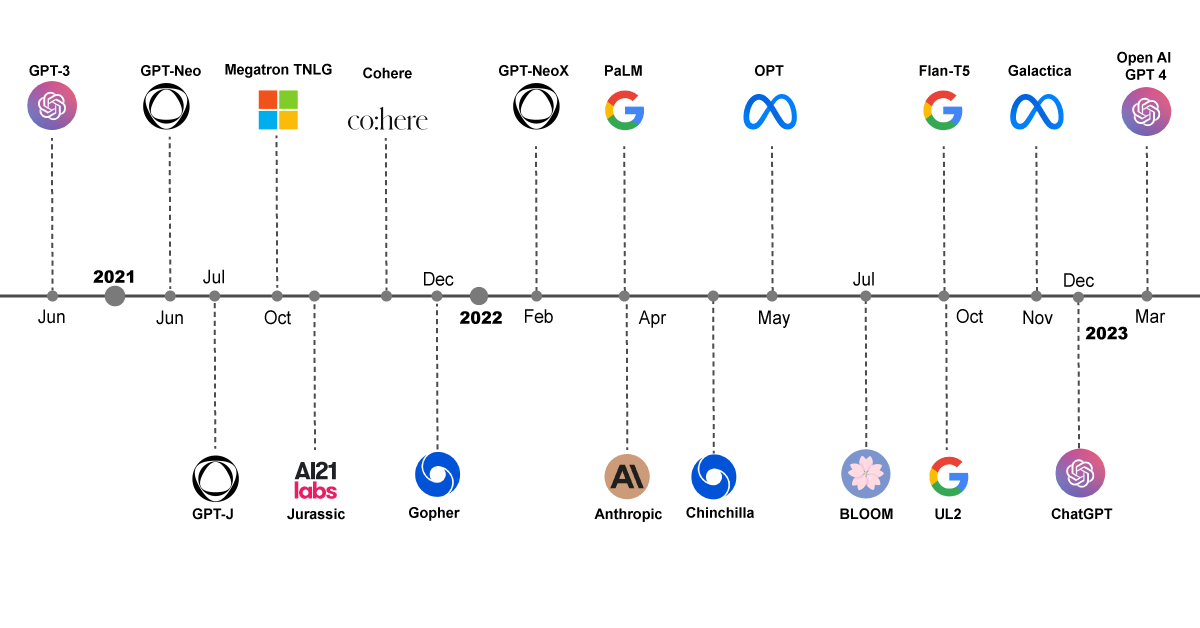

The progress in Large Language Models (LLMs) is amazing, especially with the introduction of GPT-4 and DALL-E. These models have started a new era in AI and LLMs. GPT-4 is a huge model with 100 trillion parameters and was trained on 45 TB of text data. It’s more than 100 times bigger than the previous model and is 90% accurate. This progress has opened up new possibilities for the future of AI and language processing.

ChatGPT, an AI language model, shows that we’re close to breaking through the technology barrier with AI. Even though progress has been slow and steady over the years, the big challenge is still to successfully use AI on a large scale. As with earlier efforts to use AI, the hardest part is putting it into practice.

What are LLMOps?

LLMOps is a part of MLOps that focuses on Large Language Models (LLMs). It’s about the systems and operations needed to fine-tune basic models and use these improved models in a product. Even though LLMOps might not be a new idea for people who are familiar with MLOps, it is a more specialized part of this field. By looking at LLMOps more closely, we can better understand the specific needs for fine-tuning and using these types of models.

Big AI models like GPT-3 have 175 billion parameters and need a lot of data and computing power to train. For example, it would take 355 years to train GPT-3 on just one NVIDIA Tesla V100 GPU. Fine-tuning these models is a complex task, even though it doesn’t need as much data or computation. To work with these big models, you need infrastructure that can use many GPU machines at the same time and handle huge datasets. So, having a strong infrastructure is important for using and fine-tuning AI models like GPT-3.

On Twitter, people have been talking about the high costs of running ChatGPT, a language model developed by OpenAI. This shows the unique computing needs of big models like ChatGPT. These models often need complex systems of models and safeguards to give accurate results. So, running these models can be expensive.

Key Challenges in Operationalizing LLMs

LLMs, or large language models, are powerful tools that can provide valuable insights and predictions. However, several challenges must be overcome to operationalize these models effectively. These challenges include:

- Model Size: LLMs can have millions of parameters, which require significant computational resources for both training and inference. This can be expensive and time-consuming.

- Dataset Management: LLMs are trained on large and complex datasets. Managing these datasets can be challenging, as they need to be cleaned, pre-processed, and organized in a way that is suitable for training.

- Continuous Evaluation: To ensure that LLMs are performing as expected, it’s important to continuously evaluate their accuracy using various metrics. Regular testing and evaluation are necessary to identify any issues and make improvements.

- Retraining: LLMs require active feedback loops, where new data is used to update the model and improve its performance. This involves collecting new data, validating it, and retraining the model regularly.

By addressing these challenges, organisations can effectively operationalize LLMs and leverage their power to improve decision-making, increase efficiency, and drive innovation.

The question of whether it is possible to run and fine-tune large language models is a pressing concern. However, with technological advancements, running 13B LLMs like LLaMA on edge devices, such as the MacBook Pro with an M1 chip, has become nearly effortless. Soon, it may be possible to run 13B+ LLMs on mobile devices locally.

LLM Open Sources Training Frameworks

- 𝐀𝐥𝐩𝐚: Alpa is a powerful system designed for training and serving large-scale neural networks. With the ability to scale neural networks to hundreds of billions of parameters, breakthroughs like GPT-3 are now possible. However, training and serving these massive models require complex distributed system techniques. Alpa addresses this challenge by automating large-scale distributed training and serving with minimal code.

Alpa is the perfect solution for businesses and researchers who want to harness the power of large-scale neural networks without the hassle of complicated distributed system techniques. With Alpa, you can easily train and serve massive models with just a few lines of code, saving you valuable time and resources.

Alpa – https://github.com/alpa-projects/alpa

Serving OPT-175B, BLOOM-176B and CodeGen-16B using Alpa: – https://alpa.ai/tutorials/opt_serving.html

- DeepSpeed: DeepSpeed is a user-friendly suite of deep learning optimization software that empowers users with unparalleled scale and speed for training and inference in deep learning. With DeepSpeed, you can achieve unprecedented performance and efficiency in your deep learning projects.

Megatron-LM GPT2 tutorial: https://www.deepspeed.ai/tutorials/megatron/

DeepSpeed – https://github.com/microsoft/DeepSpeed

- Mesh TensorFlow (MTF): MTF is a distributed deep learning language that enables the specification of a wide range of distributed tensor computations. The primary objective of MTF is to formalize and implement distribution strategies for your computation graph across your hardware/processors. For instance, you can split the batch over rows of processors and distribute the units in the hidden layer across columns of processors.

MTF is implemented as a layer over TensorFlow, making it easy to use and integrate into your existing workflow. With MTF, you can distribute your training process across multiple devices or machines, allowing you to train larger and more complex models efficiently.

If you’re looking to scale up your deep learning workloads, MTF can help you achieve faster and more efficient training times. Additionally, MTF is designed to be highly flexible, allowing you to customize your distribution strategies to suit your specific needs.

So, if you want to take your distributed deep learning to the next level, consider incorporating Mesh TensorFlow into your workflow today!

Mesh TensorFlow: https://github.com/tensorflow/mesh

- 𝐂𝐨𝐥𝐨𝐬𝐬𝐚𝐥-𝐀𝐈: A powerful platform that offers a wide range of parallel components, designed to simplify the process of writing distributed deep learning models. With its user-friendly tools and intuitive interface, It allows users to easily kickstart distributed training and inference with just a few lines of code.

Colossal-AI: https://colossalai.org

Open source solution replicates the ChatGPT training process. Ready to go with only 1.6GB GPU memory and gives you 7.73 times faster training: https://www.hpc-ai.tech/blog/colossal-ai-chatgpt

Content Reference :

Top Google searches –

- https://valohai.com/blog/llmops

- https://www.fiddler.ai/blog/llmops-the-future-of-mlops-for-generative-ai

Top LinkedIn Post

- https://www.linkedin.com/feed/update/urn:li:activity:7047185045303738368/?updateEntityUrn=urn%3Ali%3Afs_feedUpdate%3A%28V2%2Curn%3Ali%3Aactivity%3A7047185045303738368%29

- https://www.linkedin.com/feed/update/urn:li:activity:7047449940192591872/?updateEntityUrn=urn%3Ali%3Afs_feedUpdate%3A%28V2%2Curn%3Ali%3Aactivity%3A7047449940192591872%29